Ted Summer

climbing

I'm into 90s mountain bikes right now. I currently have a '93 Raleigh M-40.

just kidding i have no idea how to use it properly

list format roughly follows gemini's gemtext syntax. will probably move further away from it as I go because it's mine

you can even read the code that reads my code to put it on this site

here's where i write tests for this website

#root/Ted-Summer/things-I-like-/hyperlinks

this is a really long line I wonder how it will render. Lorem Ipsum is simply dummy text of the printing and typesetting industry. Lorem Ipsum has been the industry's standard dummy text ever since the 1500s, when an unknown printer took a galley of type and scrambled it to make a type specimen book. It has survived not only five centuries, but also the leap into electronic typesetting, remaining essentially unchanged. It was popularised in the 1960s with the release of Letraset sheets containing Lorem Ipsum passages, and more recently with desktop publishing software like Aldus PageMaker including versions of Lorem Ipsum.

what's under here?

hello from the child

fix text overflow for text on mobile

generate list data for _all_ go files (currently explicitly listed)

links between nodes?? e.g. a link `=>` could point to a node on the list, and it'd auto-open. that would be neat but would break the 'no js' rule probably.

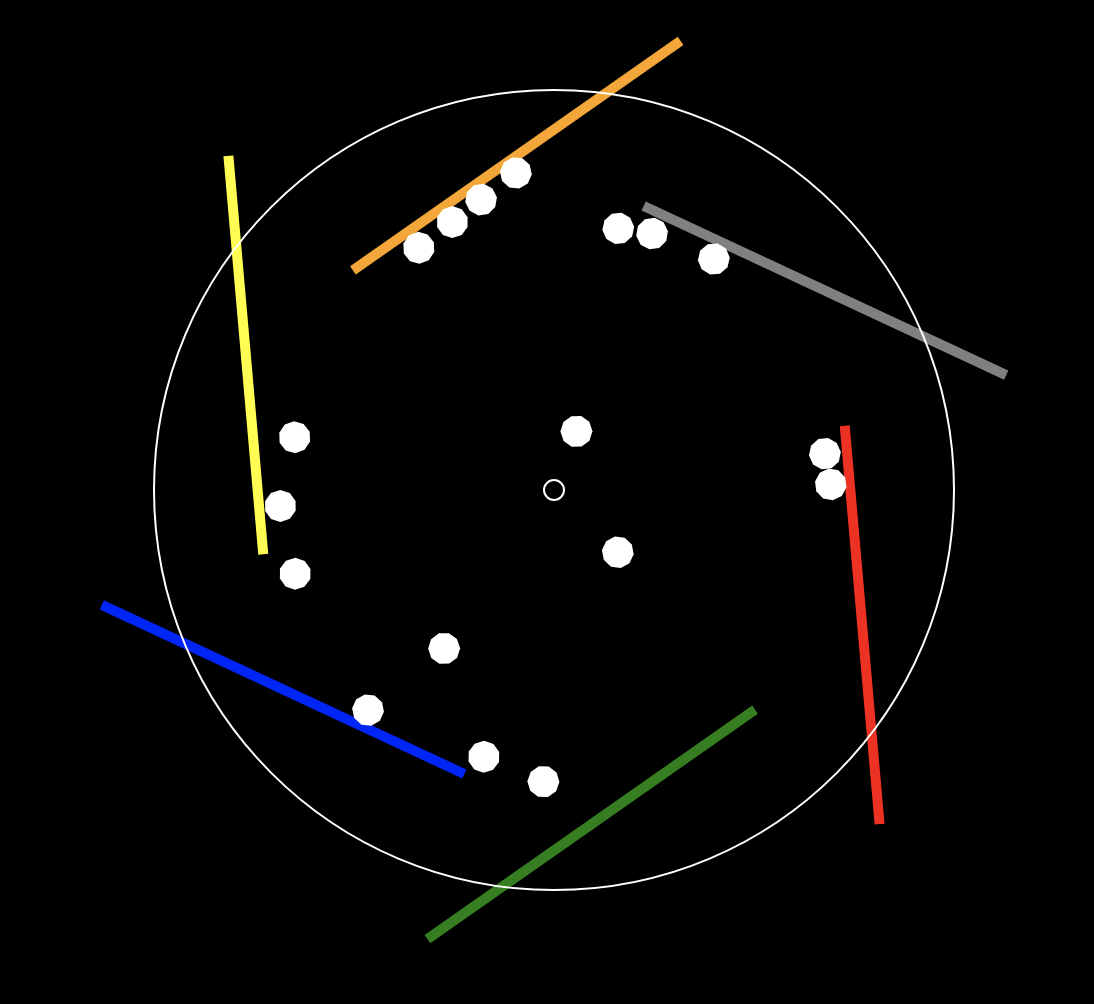

Inspired by Teenage Engineering OP-1's tombola sequencer.

https://tombola.tedsummer.com/

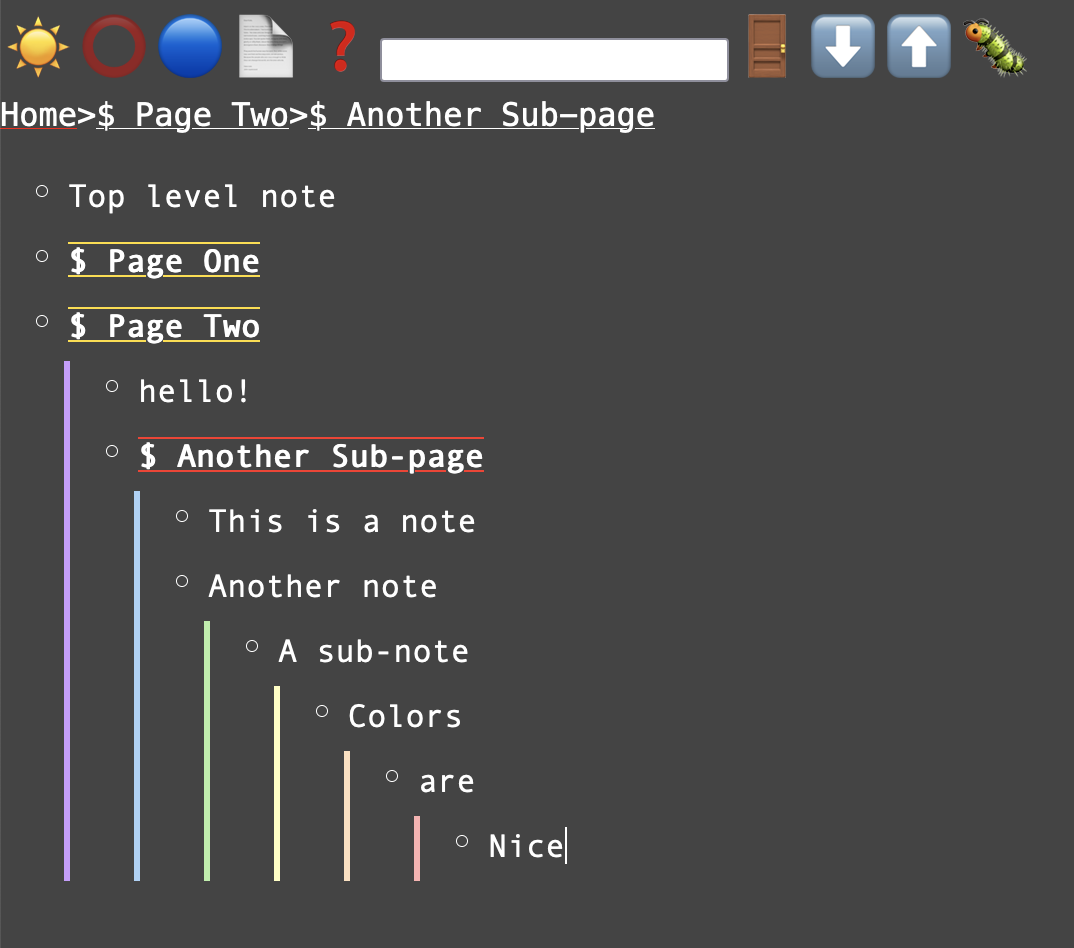

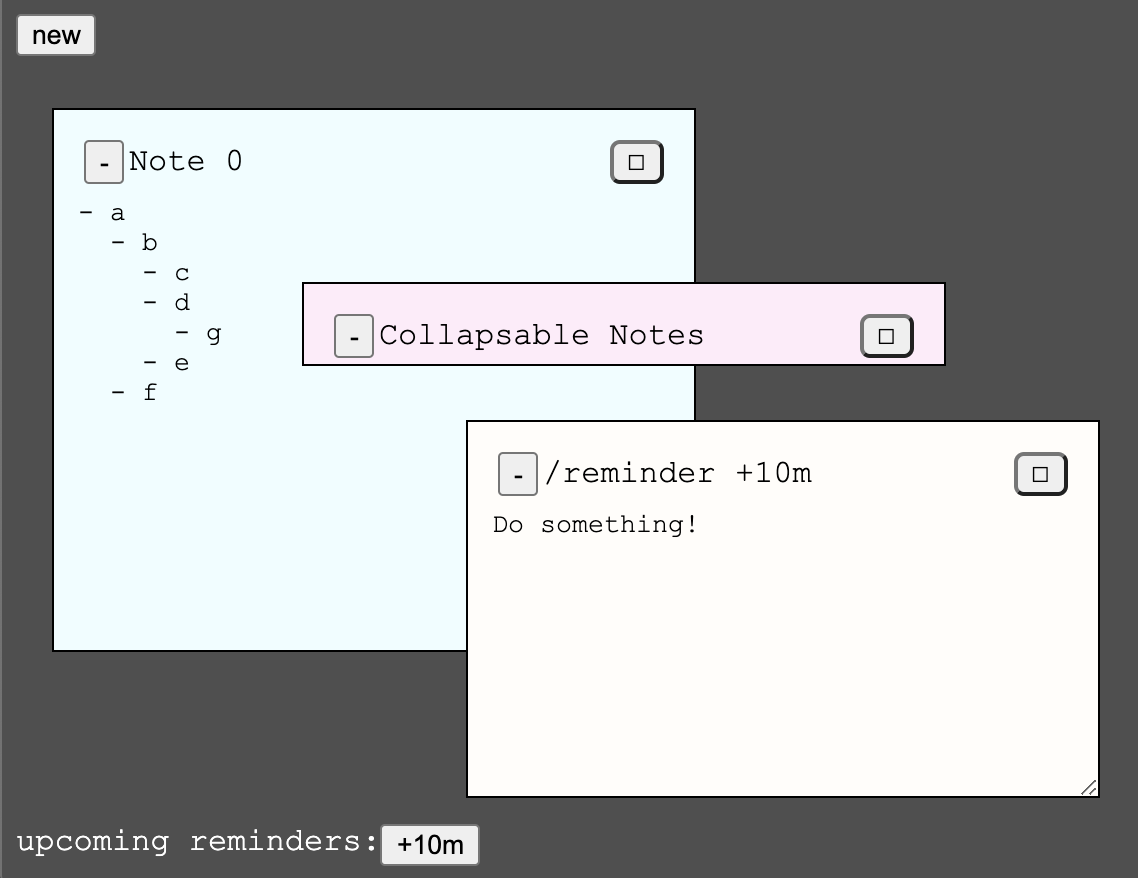

A lightweight note taking application centered around lists.

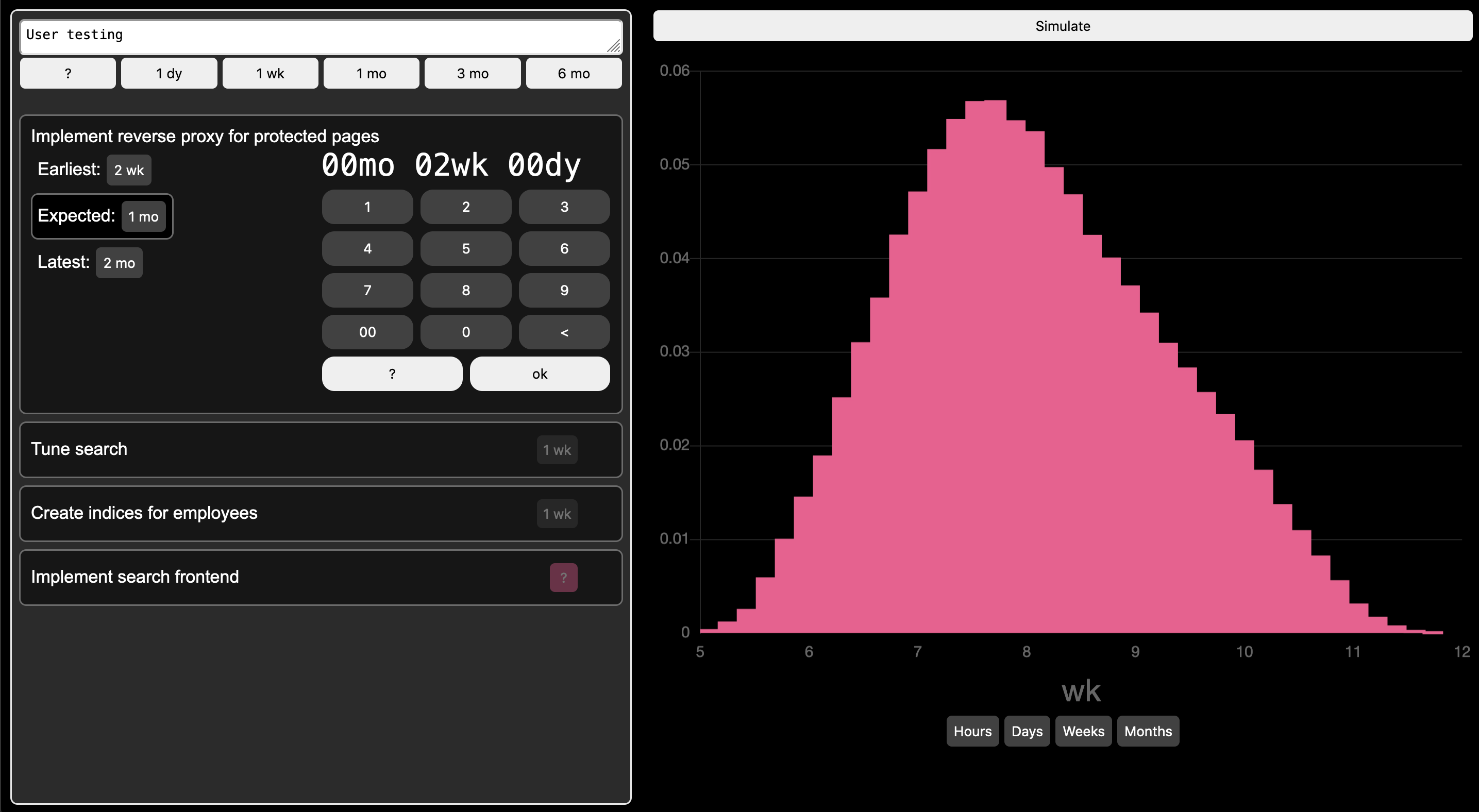

A timeline estimator for multiple tasks. Uses Monte Carlo simulations to estimate when a collection of tasks will be complete. Mostly an exercise in creating fluid UI/UX.
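A minimal sketch of the Monte Carlo idea, not the app's actual code. It assumes each task gets a best/likely/worst estimate in days, that tasks run one after another, and that a triangular distribution is a fair stand-in for each estimate; the function names and the task numbers are made up for illustration.

```python
import random


def simulate_total_days(tasks, rng):
    """One simulated run: sample a duration for every task and add them up."""
    return sum(rng.triangular(low, high, mode) for (low, mode, high) in tasks)


def estimate_completion(tasks, runs=10_000, seed=0):
    """Run many simulations and report the 50th and 90th percentile totals."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    totals = sorted(simulate_total_days(tasks, rng) for _ in range(runs))

    def percentile(p):
        return totals[min(runs - 1, int(p * runs))]

    return {"p50": percentile(0.50), "p90": percentile(0.90)}


# hypothetical tasks: (best case, most likely, worst case) in days
tasks = [(1, 2, 5), (2, 3, 8), (0.5, 1, 2)]
estimate = estimate_completion(tasks)
```

Reading `p90` as "90% of simulated runs finished by this many days" is usually more useful than a single summed guess.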

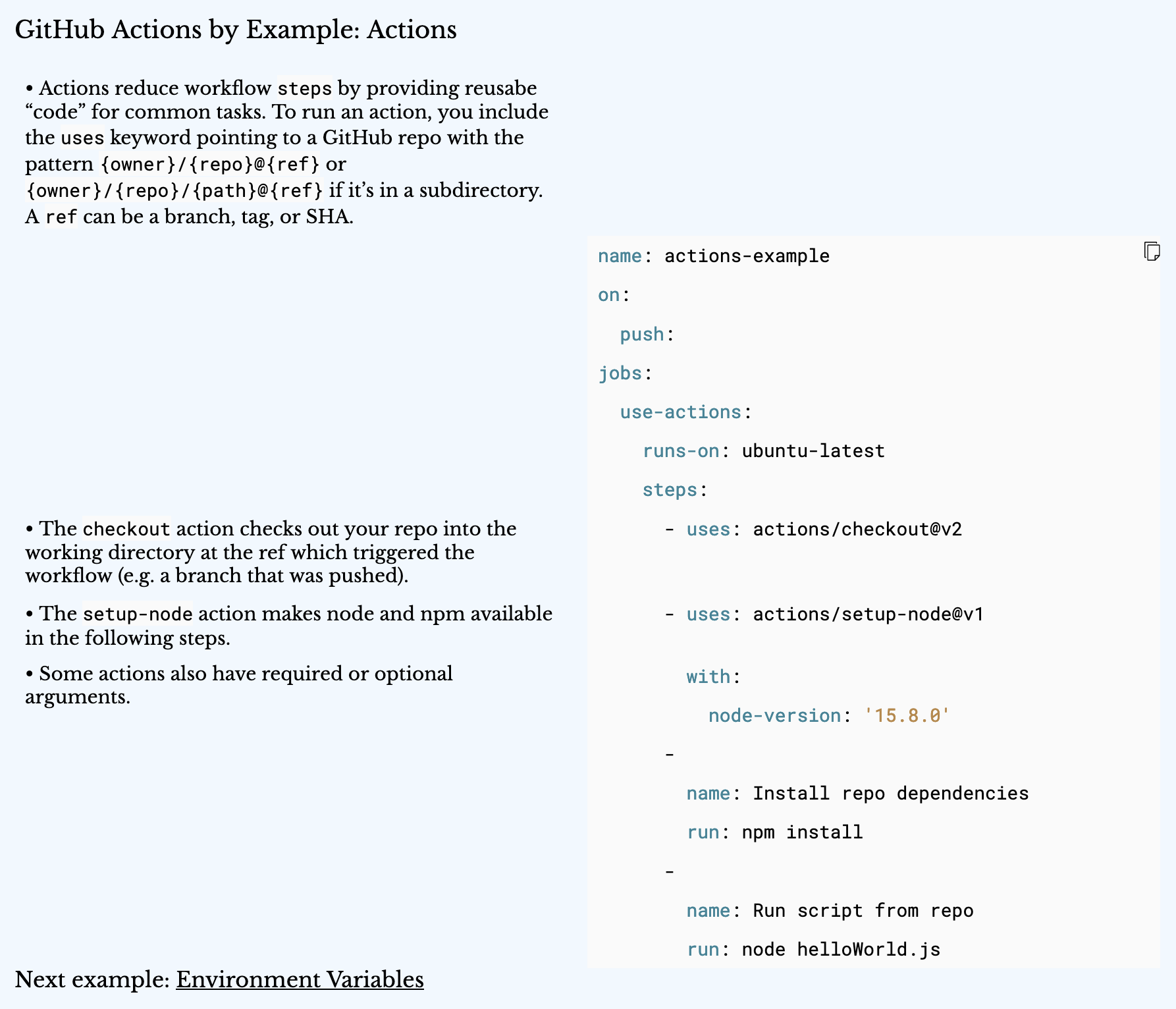

GitHub Actions by Example is an introduction to GitHub’s Actions and Workflows through annotated example YAML files. I wrote a custom HTML generator in Golang to generate the documentation from YAML files.

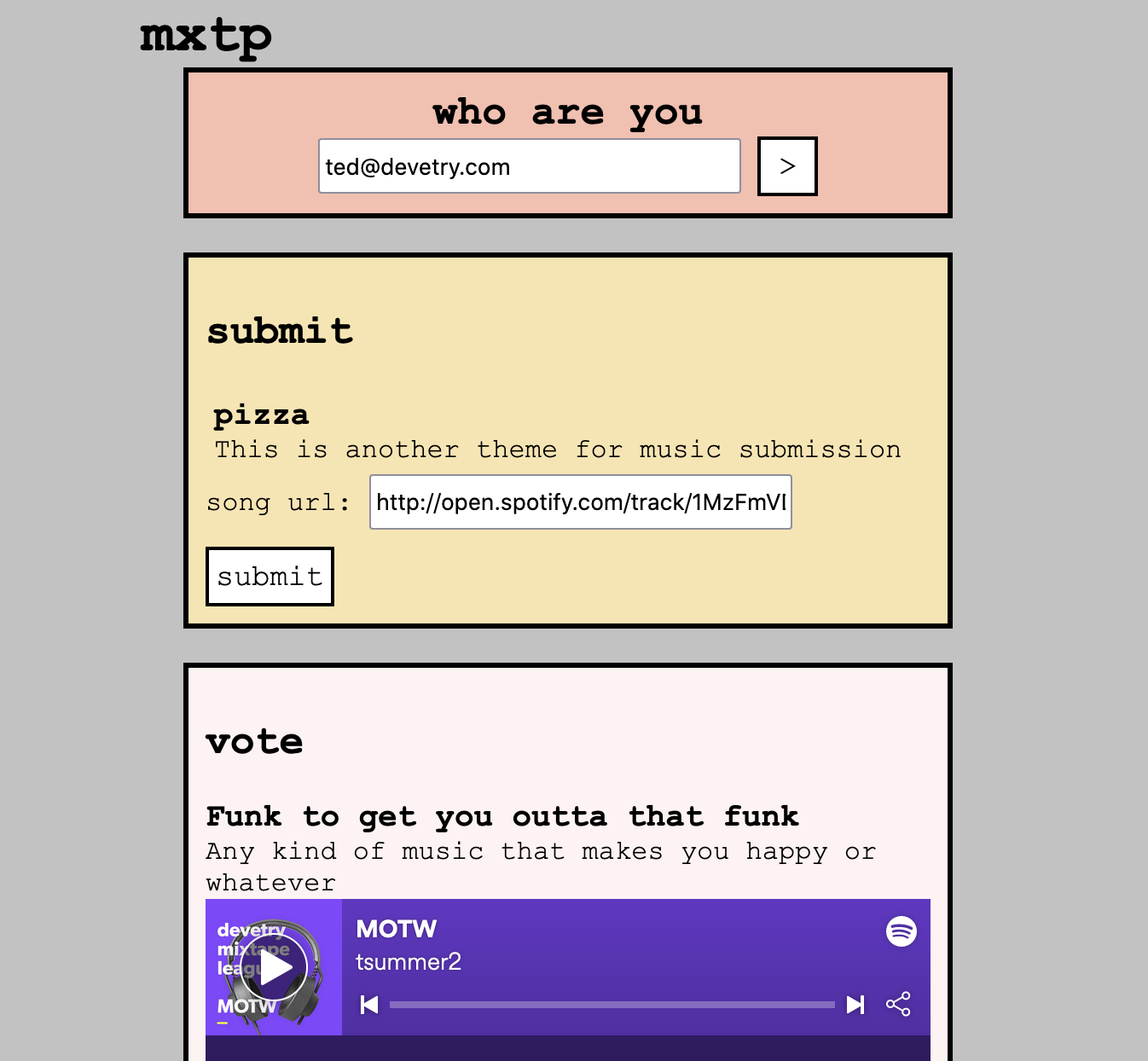

A game where players build themed music playlists with friends. Had some fun writing a custom router in Golang.

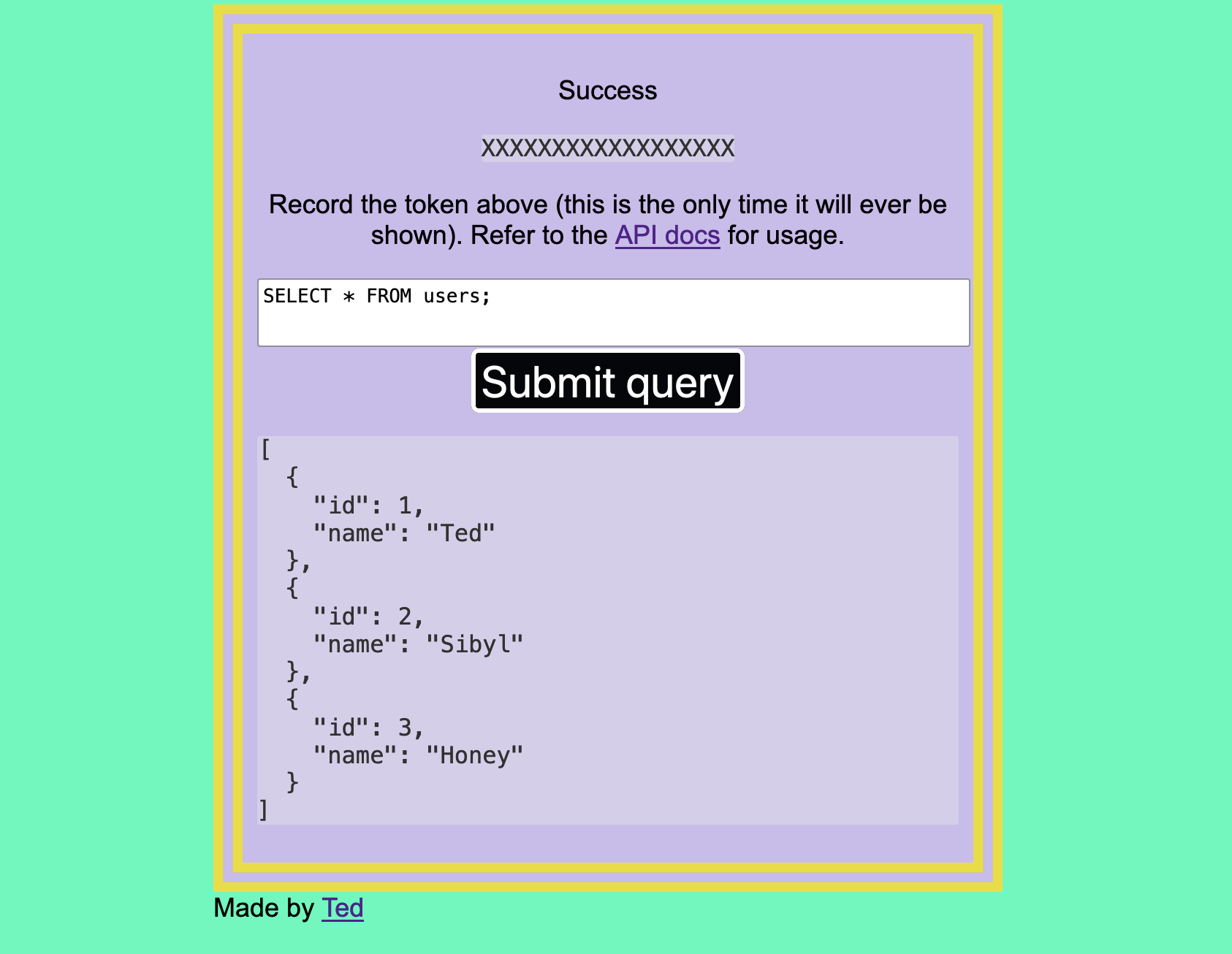

free sqlite databases. queried through HTTP API. hand made with go

https://github.com/macintoshpie/freedb

Post-it notes and scheduled reminders app.

https://jot.tedsummer.com

Twitter bot which solves another Twitter bot’s ASCII mazes. Looks like it's banned now. thanks elon ®

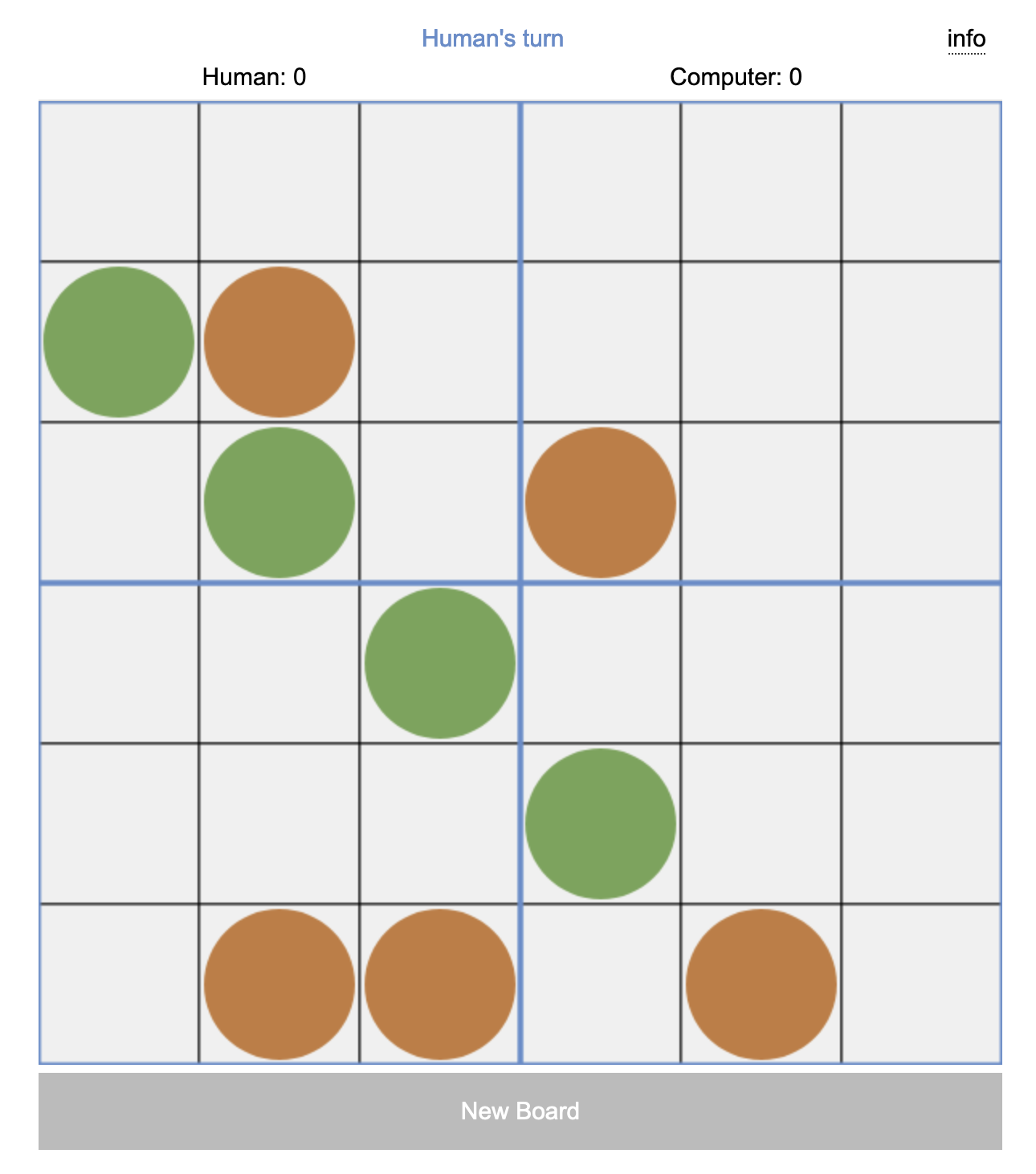

Play against a friend or naive bots in pentago, gomoku, and other grid based games.

shorts

are there people who think "self-development" using llms is bad? why

i feel like there is and it might be a popular opinion actually. i don't currently understand why they think it's bad. if i agree with a statement, but didn't synthesize it myself, how does that impact things? books vs llms

i suppose it could further encourage "fast eradication of discomfort by any means necessary" but i think that's not the same as actual self-development.

* the information from llm could be bad or misunderstood (but idk if that's unique to llm vs talking to friend/reading book)

* your ability to solve problems without the llm could get worse. which seems ok, but if you lose llm you might be fucked (nicotine/health argument)

\* = thoughts after chatting with llm about this question :)

dates are hostile ui elements

specific dates in far future or past mean nothing. we "don't care". or maybe straight up can't recall b/c it's so specific without context.

it'd be cool if there was like a flower that slowly dies as "days since last updated" increases. i think im going to make that

e.g. someone reading my "last updated xxxx-xx-xx" date on bottom of my site and trying to determine "is there something new" or even "is this thing still used"

though i guess in a "reference" format (when did X happen) are useful (e.g. calendar lol)

looks like even apple.com has chilled out with these effects

there's probably some game dev principle about not violating expected input effects

requiring the site to turn the user's mouse into a "scroll down" hint should tell you you've fucked up

it also should have used camel case

download your albums by clicking the kebab on each album and selecting "download". you could script this if you wanted. also it's async, facebook will give you a notification in app when it's ready to download.

I began trying out sourcehut because it has gemini hosting.

https://git.sr.ht/~macintoshpie

It's significantly easier to use than github pages. The docs are great and short, but I'm documenting some snippets to make copypasting things easier for myself later.

https://srht.site/automating-deployments

add A records

https://docs.github.com/en/pages/configuring-a-custom-domain-for-your-github-pages-site/managing-a-custom-domain-for-your-github-pages-site#configuring-an-apex-domain

go to repo settings and add the domain, e.g. https://github.com/user/repo/settings/pages

push to main

sometimes it takes a while for it to "verify" DNS

I wanted to make some prints on hats for a "running party" we were having. A mouse dubbed [Mr. Jiggy](https://banjokazooie.fandom.com/wiki/Jiggy) lives (lived) with us, so I wanted him as a mascot on each team's hat. So I bought some linoleum, cheap ass tools, and Speedball fabric ink off amazon.

I found a chinese site that sells hat blanks, but I would not recommend it because the hats I received did not look like the advertised product. 1 star.

mr. jiggy lived in our dishwasher, and while playing banjo kazooie after my roommate had a heatstroke we thought it was really funny to name him that (her? we don't know).

I asked Dall-E to generate some photos of linoleum mice as a starting place, then hand-drew a simplified version onto the linoleum.

This worked out pretty well other than the fact that I probably made it slightly too small (~2x2 inches) and it was really hard to get the hair detail. Not much to say about the cutting.

I of course forgot that the print would be "in reverse" (flipped horizontally) but who cares when it's a mouse. It would have been a problem if I stuck with the original plan of writing "stay sweaty" in Bosnian underneath, but I scrapped that after our Bosnian friend began to explain the fact that Bosnian has gendered nouns and I didn't like the longer alternatives.

Though I just did some googling/llming and found some cool bosnian bro speak like "živa legenda" (living legend) which would have been dope.

chatgpt tells me "ajmo brate" says "let's go bro" and I found this shirt on amazon (supposedly) saying "let's go bro, sit in the tavern, order, drink, and eat, let the eyes shine from the wine, we don't live for a thousand years" which is a sentiment I appreciate

I rolled the ink on 4th of july paper plates that were too small. I will be looking for glass panes or something similar for rolling ink at the animal crossing store in future visits.

I learned that I have no idea how much ink to use, and that you should put a solid thing behind whatever you're printing on (the mesh backing left a pattern in the first print). But it does seem cool to experiment printing with some patterned texture behind the print.

I had been warned that nylon is a terrible fabric to print on but I did it anyways.

It's still not fully dry after 12 hours but whatever. we'll see. it'll probably wash out.

simpler design

bigger design (~2.5 inches)

trim off more of the excess linoleum when working with awkward printing surfaces

the white print had way too much ink I think. The black print looks wonky because I printed without a solid surface behind the fabric (the mesh behind the hat came through).

I've been messing around with a project which uses netlify and lambda (it's free and static sites are hawt). I basically have one main lambda function, built in golang, which handles api requests. It's pretty awesome how easy netlify lets you build and deploy, but I wanted a nice local setup for building and testing my api server. I think aws has its own tooling for this, but I didn't really want to start fooling with it, so I came up with this.

To fix this, I created a small python proxy that takes requests, converts them into API Gateway requests, forwards them to our docker container with the lambda, then converts the API Gateway response into a normal HTTP response. I _really_ struggled to get the python request handler to do all of the things I wanted, but eventually I got it working.
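Roughly, the two conversions look like the sketch below. This is not the original proxy: the function names are hypothetical, the event only carries the common fields of the API Gateway Lambda proxy format (`httpMethod`, `path`, `queryStringParameters`, `headers`, `body`, `isBase64Encoded`), and the step that actually forwards the event to the docker container is left out because it depends on how the container is invoked.

```python
import base64
import json


def to_api_gateway_event(method, path, query, headers, body):
    """Wrap a plain HTTP request in an API Gateway proxy-style event dict."""
    return {
        "httpMethod": method,
        "path": path,
        "queryStringParameters": query or None,
        "headers": headers,
        "body": body.decode("utf-8") if body else None,
        "isBase64Encoded": False,
    }


def from_api_gateway_response(response):
    """Unpack a proxy-style lambda response into (status, headers, body bytes)."""
    body = response.get("body") or ""
    if response.get("isBase64Encoded"):
        raw = base64.b64decode(body)
    else:
        raw = body.encode("utf-8")
    return response.get("statusCode", 200), response.get("headers") or {}, raw


# hypothetical request and a hand-written lambda response for illustration
event = to_api_gateway_event(
    "POST", "/api/notes", {"page": "1"},
    {"Content-Type": "application/json"}, b'{"title": "hi"}',
)
fake_response = {
    "statusCode": 201,
    "headers": {"Content-Type": "application/json"},
    "body": json.dumps({"ok": True}),
}
status, headers, raw = from_api_gateway_response(fake_response)
```

Keeping the conversions as plain functions makes them easy to test without starting the proxy or the container.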

This could probably be improved but it's worked so far for my toy project. One significant improvement to this process would be to have the docker container auto rebuild the function whenever it changes, but I've yet to add that.

Here's a quick example of using jq in a for loop. jq has some nice functional stuff built in such as `map()`, but sometimes you need to do some fancy stuff with the data. This might be useful when you've filtered a jq array, and then need to iterate over the objects to do some work that you can't do in jq alone.

script

## Get the objects

First, we care only about the `data` array which stores our user objects containing the URLs, so we use that object id to access it.

Notice the `-c` flag, it's important for looping over the objects. This tells jq to put each object onto a single line, which we'll use in the loop.

In bash, we can loop over lines by using the `while read -r varName; do ...; done <<< "$lineSeparatedVar"` pattern. `read -r <name>` will read in a line from STDIN, then assign the value to `<name>`; the `-r` flag tells `read` "do not allow backslashes to escape any characters".

Now we can loop over objects from our array like so

I've not fully tested this code. You may want to base64 encode the objects, then decode them if you wanna be really safe.
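If the one-object-per-line idea is hard to picture, here is the same shape sketched in Python rather than bash. The user objects are invented stand-ins for the `data` array, and the base64 round trip is just one way to do the "really safe" variant mentioned above.

```python
import base64
import json

# hypothetical user objects standing in for the `data` array
users = [
    {"name": "ada l", "url": "https://example.com/ada"},
    {"name": "tab\there", "url": "https://example.com/tab"},
]

# one compact object per line, the same shape jq's -c flag produces
lines = "\n".join(json.dumps(u, separators=(",", ":")) for u in users)

decoded = []
for line in lines.splitlines():
    # "really safe" variant: base64 the line, pass it around, decode it later
    token = base64.b64encode(line.encode("utf-8")).decode("ascii")
    obj = json.loads(base64.b64decode(token))
    decoded.append(obj)
```

The base64 token contains no spaces, quotes, or backslashes, which is why it survives shell word splitting and escaping.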

To `curl` concurrently, toss a `&` on the end of the curl command to run it as a background process

Recently I've been running through picoCTF 2018 and saw this problem that can be solved with some cool stuff from jq (a handy JSON processor for the command line).

https://stedolan.github.io/jq/

A shortened version of the provided data, `incidents.json`, is below.

Pipe it up, pipe it up, pipe it up, pipe it up

Pipe it up, pipe it up, pipe it up, pipe it up

- Migos, Pipe it up

https://www.youtube.com/watch?v=8g2KKGgK-0w

jq accepts a JSON document as input, so first we `cat` our JSON data into jq. In jq, arrays and individual elements can be piped into other functions.

The first step is pretty straightforward. We select `tickets` and group the objects by their `.file_hash` attribute, giving us this:

Then we get the number of objects in each group

Then you can just pipe that array into `add / length` to calculate the average for the array
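For comparison, the same three steps (group by hash, count each group, average the counts) sketched in Python. The tickets below are invented stand-ins, not the picoCTF data, and only the `file_hash` field name comes from the text above.

```python
from itertools import groupby

# hypothetical stand-in data, not the real incidents.json
tickets = [
    {"ticket_id": 0, "file_hash": "aaa"},
    {"ticket_id": 1, "file_hash": "ccc"},
    {"ticket_id": 2, "file_hash": "aaa"},
    {"ticket_id": 3, "file_hash": "bbb"},
    {"ticket_id": 4, "file_hash": "ccc"},
    {"ticket_id": 5, "file_hash": "aaa"},
]


def file_hash(ticket):
    return ticket["file_hash"]


# step 1: group by file_hash (groupby needs sorted input; jq's group_by sorts too)
groups = [list(g) for _, g in groupby(sorted(tickets, key=file_hash), key=file_hash)]

# step 2: number of objects in each group
sizes = [len(g) for g in groups]

# step 3: average group size, the `add / length` step
average = sum(sizes) / len(sizes)
```

Each intermediate list here corresponds to what one stage of the jq pipeline hands to the next.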

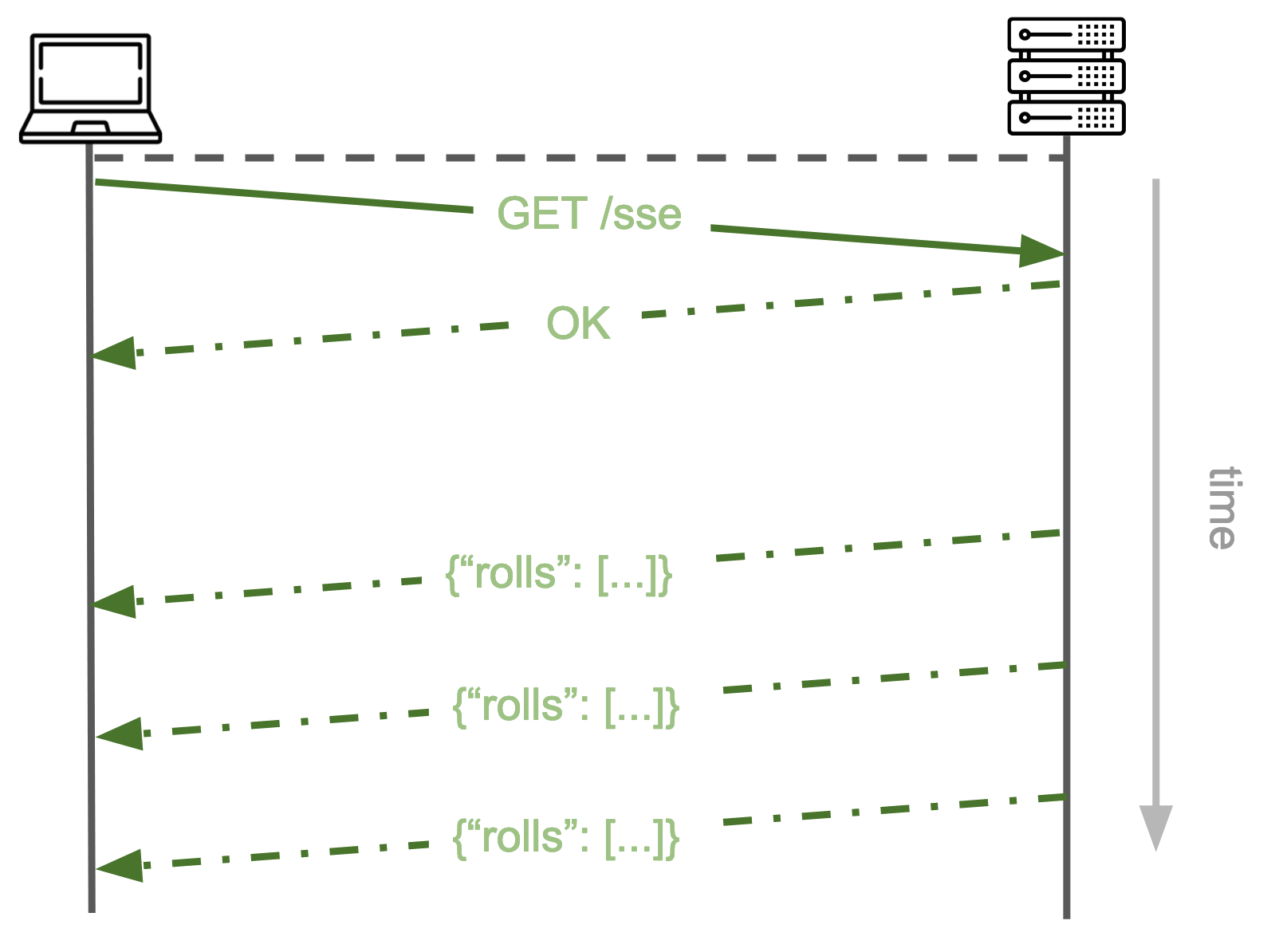

server-sent events

A brief introduction to server-sent events, when to use them and when not to use them.

https://docs.google.com/presentation/d/1i2vT6nMrRUsmFusH8HL-0fHZUEifyniL_8q0f0pBCBg/edit?usp=sharing

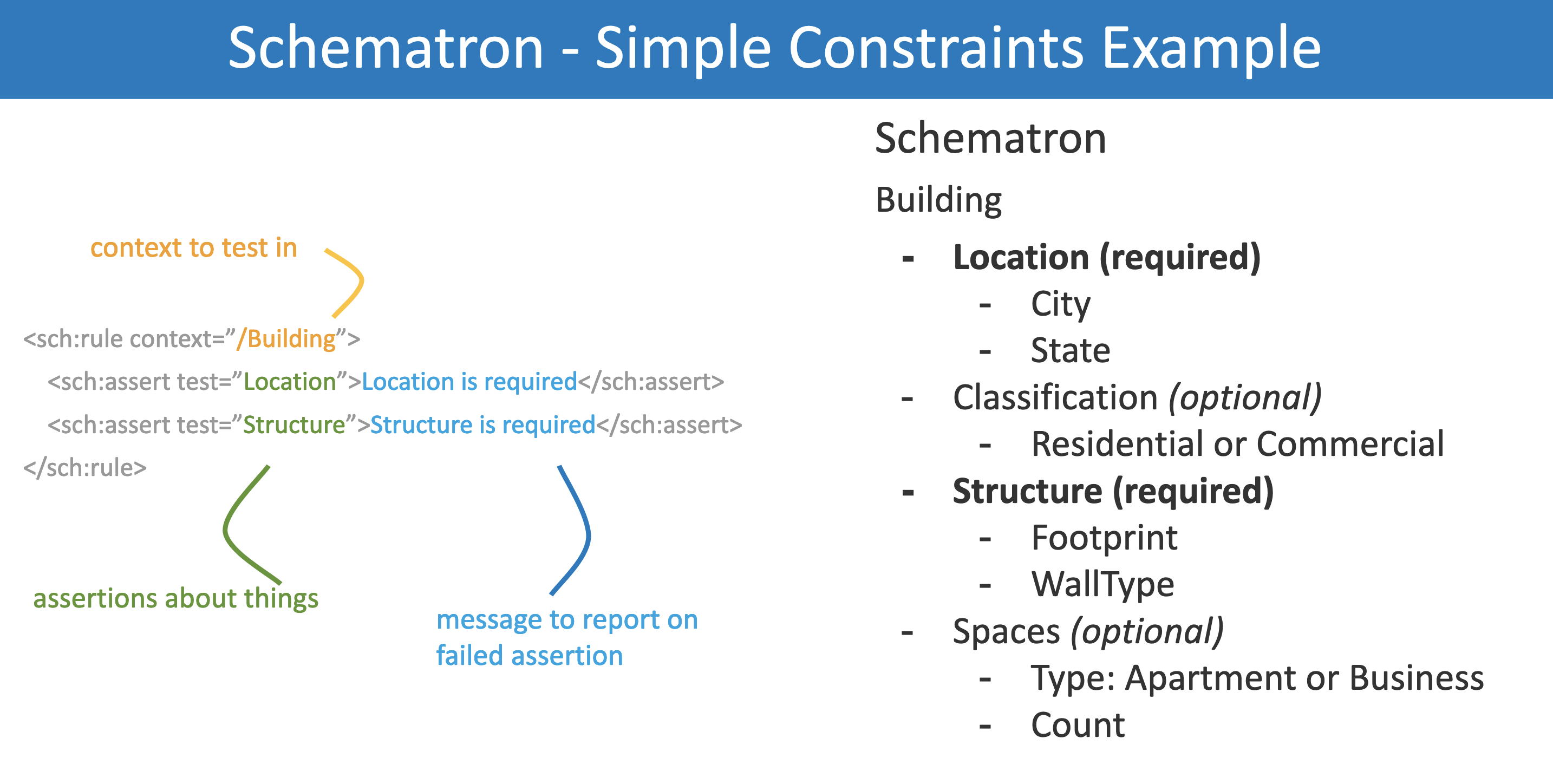

Introduction to Schematron, a language for validating XML documents.

education

M.S. in computer science

University of Chicago, 3.9 / 4.0, 2018-2019

Algorithms, C Programming, Operating Systems, Networks, Parallel Programming, Big Data, Application Security, Intro to Computer Systems, Discrete Math

B.S. double major neuroscience & chinese studies

Furman University, 3.48 / 4.0, 2012-2016

February 2022 - September 2024

Bringing the next billion software creators online.

February 2022 - September 2024

Solving complex problems for clients with custom software and codebase improvements (Python, Django, Golang, JavaScript, XML Schema, PHP)

Tech lead for the rebuilding of the Devetry website (Netlify, React)

January 2019 - June 2019

Created Python package which automates the process of deploying, running, and optimizing arbitrary programs

Used Bayesian Optimization to significantly reduce the amount of time required to optimize tool configuration

Created RESTful web service for running jobs with the package on AWS and storing results using Flask, Redis, Docker Compose and PostgreSQL

May 2018 - May 2019

Used Node.js, Groovy, Bash, and Docker to develop tools and automation for Kubernetes management and CI/CD pipelines in Jenkins

Created custom canary rollout method using Kubernetes, JavaScript, and NGINX

Refactored, enhanced, and fixed previous bugs in Django web application backend

Designed and created a custom survey frontend using vanilla JavaScript, primarily targeted at mobile use

Created tools and statistical analysis reports on data collected through the platform using Pandas

June 2016 - July 2017

Created data processing pipelines for organizing, cleaning, and merging eye tracking, EEG and behavioral data using Jupyter notebooks, Pandas, Numpy, and matplotlib

Created an embedded database application in Java with functional GUI for more effective recruitment

watever

cmd

build

main.go

cmd

runDev

main.go

build.go

server.go

highlighter.go

monitor.go

fileTreeParser.go

parser.go

html.go

list.go

renderer.go

xml.go

walker.go